Submitted URL Blocked by Robots.txt? Here's How to Fix the Code That’s Hiding Your Site From Google

Table of Contents

- Key takeaways

- What does "submitted URL blocked by robots.txt" mean?

- Why a page might be blocked by robots.txt

- What is the "indexed though blocked by robots.txt" error?

- How to fix "submitted URL blocked by robots.txt"

- How to fix "indexed though blocked by robots.txt"

- What not to do with your robots.txt file

- Still seeing "blocked by robots.txt" after fixing?

- Final checks: Make sure your pages are visible to Google

Key takeaways

- A “submitted URL blocked by robots.txt” error means Google can't crawl a page you've asked it to index, often due to conflicting instructions in your robots.txt file.

- Pages can still be indexed even if blocked, but without crawl access, search engines can't evaluate or display meaningful content.

- To fix the issue, review your robots.txt file, identify disallow directives affecting important pages, and adjust them accordingly.

- Use tools like the Google Search Console Robots.txt Tester and URL Inspection to validate changes and request reindexing.

- Popular SEO tools let you view and edit robots.txt rules directly from your site's dashboard, depending on the platform.

When Google Search Console shows the error “submitted URL blocked by robots.txt,” it usually means your content can't be crawled—making it nearly impossible for Google's crawler to evaluate, understand, or rank it properly.

This isn’t some small hiccup you can ignore. It’s more like accidentally locking your best content in a closet and forgetting to give Google the key. That one line in your robots.txt file could be the reason your most important pages are invisible across search engines.

The good news? You don’t need to be a technical expert to fix it. As an ecommerce SEO agency, Lantern Sol helps brands spot indexing issues early—before they start draining traffic or burying your best pages.

In this article, we’ll walk through what the error means, how to fix it, and how to avoid similar problems in your page indexing report.

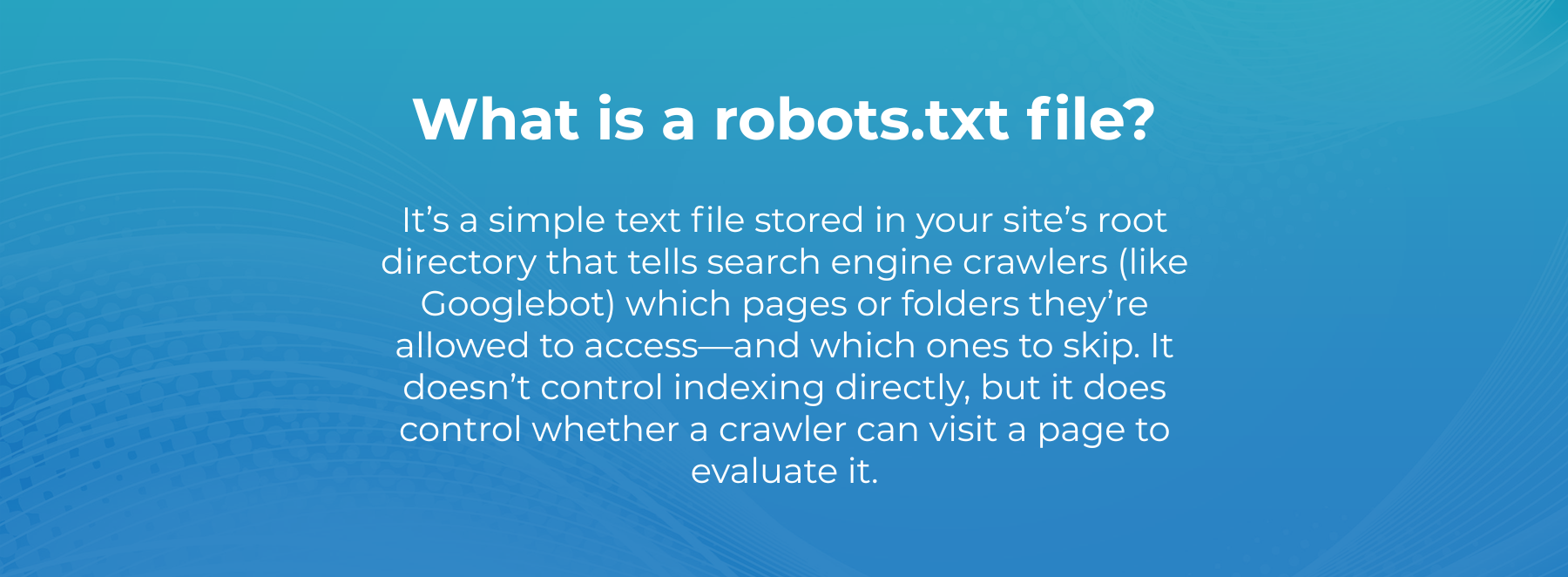

What does "submitted URL blocked by robots.txt" mean?

This error means you’ve submitted a page to Google (usually through a sitemap or an SEO plugin), but your robots.txt file is saying, “No entry.” It’s a classic case of mixed messages: you’re asking Google to index the page but also telling its crawler not to visit it.

That’s because the robots.txt file tells search engine crawlers—also known as user agents (like Googlebot)—which parts of your site they’re allowed to access. If a page is blocked, Google won’t crawl it. And if it can’t crawl it, it can’t evaluate or rank it.

Sometimes, you’ll still see the URL listed as indexed, especially if it’s linked from other pages on your site or from external websites. But because Googlebot can't access the content, the page won’t rank well and might show up in search with missing or incomplete info.

Unless you’re purposely blocking something like a draft page, an under-construction section, a cart page, or a login screen—anything that truly shouldn’t appear in search—this kind of error is something you’ll want to fix.

Why a page might be blocked by robots.txt

There are several reasons why certain pages—or even entire sections of your site—might end up blocked by your robots.txt file:

Outdated disallow directives

During development or redesigns, it’s common to block pages using lines like Disallow: /test/ or Disallow: /staging/. But sometimes these rules get left behind, unintentionally hiding live content after launch.

SEO plugins automatically adding rules

In WordPress websites, plugins like Yoast SEO or Rank Math can automatically add disallow rules based on your configuration. For example, you might accidentally block tag archives, filtered product URLs, or paginated content if you enable certain toggles in the plugin’s settings.

On platforms like Shopify, SEO apps or theme code can influence how your pages appear to search engines, but control over the robots.txt file is more limited.

Wildcard misuse

Using broad rules like Disallow: /*.php or Disallow: /*? can block login pages, product detail pages, or even your entire search function, often without realizing it.

Platform defaults

Some platforms, like WordPress, come with default rules to block folders like /wp-admin/ or /search/. Shopify and other CMS platforms may generate a robots.txt file automatically, and you might not even realize what’s being excluded.

To avoid accidentally blocking important pages, always review your robots.txt file—usually stored in the root directory of your site—using your file editor, code editor, or platform dashboard.

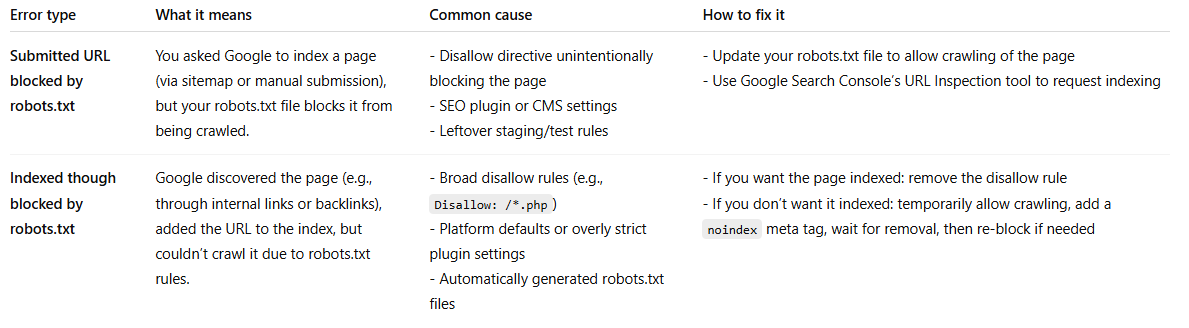

What is the "indexed though blocked by robots.txt" error?

The “indexed though blocked” message in your indexing report means Google has found the URL, likely through internal links on your site or backlinks from other websites, but wasn’t allowed to crawl the page because of a rule in your robots.txt file.

It’s not the same as the “submitted URL blocked by robots.txt” error, which shows up when you’ve actively asked Google to index a page (via a sitemap or manual submission). In contrast, “indexed though blocked” often appears when Google discovers the page on its own—and gets turned away at the door, so to speak.

Google might still show the page in search results, but it doesn’t actually know what’s on it. These URLs are indexed, but since the content is off-limits to Google’s crawler, all it can do is shrug and toss a placeholder into search—often with no description, no snippet, and zero chance of ranking well.

This can happen accidentally, especially if SEO plugins or your CMS automatically generate a robots.txt file that’s too restrictive.

To fix this:

- Allow crawling for affected pages by updating your robots.txt rules.

- Or, if the page really shouldn’t appear in search, allow crawling temporarily, add a noindex meta tag, and let Google re-evaluate the page properly before blocking it again.

Here's a quick comparison of the two errors and how to handle them:

How to fix "submitted URL blocked by robots.txt"

If Google’s throwing a “submitted URL blocked by robots.txt” error, here’s how to fix it before it costs you visibility in search. These steps apply whether you’re using built-in SEO tools, WordPress plugins, or a hosted CMS with limited file access.

1. Access your robots.txt file

Head to yourdomain.com/robots.txt. This file usually lives in your site’s root directory and controls how search engines crawl your site. You can access it through your SEO plugin, your host’s file manager, or sometimes under general settings—depending on your setup.

2. Look for disallow directives

Find the line that’s blocking the page in question. It might be as specific as Disallow: /product-page.html or as broad as Disallow: /store/, which could unintentionally block specific directories you actually want indexed.

A single mistyped line can stop important URLs from being indexed, so read carefully.

3. Use the Google Search Console Robots.txt Tester

Paste in the blocked URL and see which rule is causing the conflict. It helps explain why Google found the page but couldn’t access it to evaluate or index the content.

4. Edit the file

Adjust or remove the offending Disallow line. You can do this through your SEO plugin or directly in your site’s file manager. Just make sure you're not accidentally blocking something important—or exposing a draft page that isn’t ready for search engines.

5. Request Google to re-crawl

Go back to Google Search Console, use the URL Inspection tool, and click Validate Fix. This prompts Google to take another look, which speeds up the re-crawling process and can help get the page properly indexed again.

How to fix "indexed though blocked by robots.txt"

The fix here depends on what you actually want Google to do with the page. Do you want it indexed? Or do you want it to vanish from search?

If the page should be indexed

- Edit your robots.txt file to remove or adjust the disallow rule so Googlebot can crawl the URL. Think of it as opening the door instead of just letting Google peek through the window.

If the page shouldn’t be indexed

- Add a noindex meta tag to the page. This tells Google, “You can look, but don’t list this one.”

- Temporarily allow crawling in your robots.txt file so Google can access the page and see the noindex tag.

- Wait for Google to process the change and remove the page from search results.

- Once it’s gone, block it again in robots.txt if you still don’t want the page crawled going forward.

What not to do with your robots.txt file

A robots.txt file may look simple, but a single misplaced line can quietly tank your visibility. Here are a few common mistakes to avoid:

- Don’t block important pages you want ranked: Accidentally blocking /blog/ or /products/ can prevent search engines from accessing content you actually want to appear in search results.

- Don’t rely on robots.txt to remove pages from Google’s index: Blocking a URL in robots.txt only stops it from being crawled. If Google has already discovered the page, especially through external links, it may still appear in search. To fully remove it, use a noindex meta tag.

- Don’t forget to test your edits: Use the Robots.txt Tester in Google Search Console to confirm your changes are working as expected. Even small errors can unintentionally block access to key pages or entire directories.

Still seeing "blocked by robots.txt" after fixing?

If you've made changes to your robots.txt file but Google still shows the page as blocked, don’t panic. Google doesn’t crawl your site in real time, and it may take a few days (or longer) for updates to be reflected in Search Console.

Here’s what to do in the meantime:

Check your site’s live robots.txt file

Visit yourdomain.com/robots.txt or access it through your hosting provider’s file editor or the SEO tools panel in your CMS. If you're using WordPress, you can often find it in the left-hand menu under your plugin or settings area.

Make sure your changes were saved and published correctly.

Use the URL Inspection tool in Google Search Console

Enter the affected URL to see its current crawl status. If it still shows as blocked, click “Request Indexing” to ask Google to revisit the page and apply your recent changes.

Monitor the Page Indexing report

Keep an eye on the status in Search Console over the next few days. This will confirm whether the update has been processed and if the URL is now eligible for indexing.

If everything is configured correctly, the error should clear up after Google re-crawls your site. If it doesn't, there may be another blocking rule or issue worth investigating.

Final checks: Make sure your pages are visible to Google

Once you’ve addressed any robots.txt issues, take a few extra steps to ensure your important pages are actually being seen by search engines:

- Use tools like Google Search Console or trusted SEO platforms to test crawlability and indexing status.

- Check key URLs with a site:yourdomain.com/page search to confirm they’re appearing in search results.

- Review your robots.txt file to make sure no critical pages or directories are being blocked unintentionally.

- Re-submit important URLs through the URL Inspection tool to prompt Google to revisit them.

- Use your SEO plugin or CMS settings to stay on top of site structure and file access as your content evolves.

Need help troubleshooting indexing issues on your site? Request a free ecommerce SEO audit to fix blocked URLs and boost your site's visibility in search.

Up Next

Interested in detailed step-by-step methods to grow your account?